A brief historical survey of European state-of-play, the open issues and the ProCAncer-I approach

Over the last decade, the development of Artificial Intelligence (AI) solutions to support the whole value chain of medical imaging[1] has accelerated considerably. This progress relies notably on the increased availability of imaging datasets and on the impressive performance of data-driven methods, spearheaded by deep learning as well as by radiomics and multi-omics studies. ProCAncer-I itself is expected to contribute significantly to the field with its AI-powered, quality-controlled and GDPR[2]-compliant platform, which integrates imaging data and AI models for precision care in prostate cancer.

Nevertheless, given the high impact that AI-driven applications may have on clinical workflows, and the still-open challenges and hindrances to their uptake in practice, all stakeholders agree on the need for robust regulatory frameworks to steer that impact in a positive direction. Only in this way can we expect to eventually realise the full benefits of AI in medical imaging and in healthcare as a whole. The 2020 joint report by EIT Health and McKinsey & Company clearly highlighted the existence of legislative gaps and the need for new legislation[3].

It is in response to such demands and concerns that the European Commission (EC) has taken action on a more general societal basis. In 2018, with its first Communication “Artificial Intelligence for Europe”[4], the Commission put forward a strategy for AI that promotes a European approach based on excellence and trust. This approach seeks to maximise the benefits of AI while preventing and minimising any risks that may affect the public sector, markets and businesses, as well as people’s safety and fundamental rights. In April 2019, the Commission-appointed High-Level Expert Group (HLEG) on AI built on this strategy by publishing the “Ethics Guidelines for Trustworthy AI”[5]. These propose seven key requirements to guarantee the trustworthiness of an AI model or system:

- human agency and oversight, through human-in-the-loop and human-centred approaches

- technical robustness and safety, based on accuracy, reliability and reproducibility

- privacy and data governance, relying on the preservation of privacy, data quality and integrity as well as on a legitimised access, use and reuse of data

- transparency, in terms of the traceability of data provenance and model development as well as of the explainability of any AI-based decisions

- diversity, non-discrimination and fairness

- societal and environmental well-being

- accountability, in terms of responsibility and auditability of AI models and systems.

Moving a step forward, in 2020 the EC issued its “White Paper on AI”, which incorporated these seven principles and launched an extensive stakeholder consultation. This resulted in the first-ever legal framework on AI, the “Proposal for a Regulation of the European Parliament and of the Council Laying Down Harmonised Rules on Artificial Intelligence”, or “Artificial Intelligence Act” (AIA)[6]. The AIA is the world’s first concrete initiative to regulate AI development, and falls within the European strategy to regulate the digital sector, alongside the GDPR and the “Data Governance Act”[7].

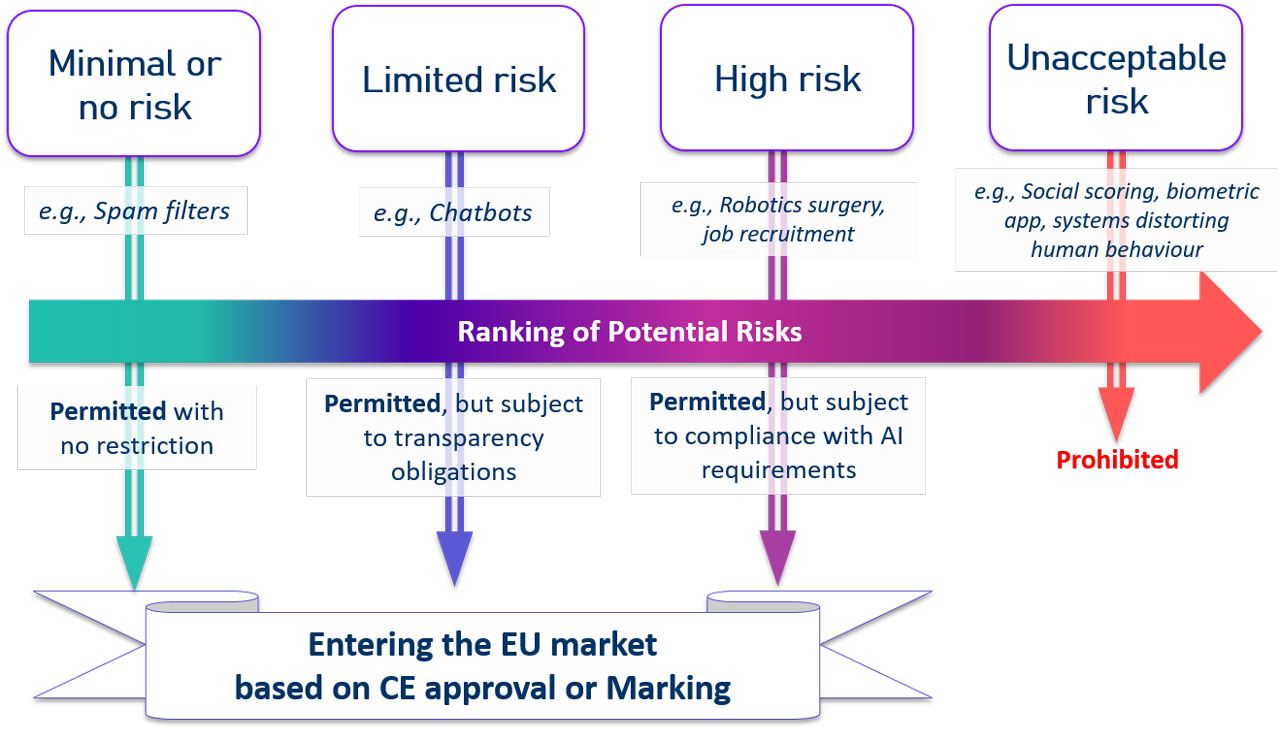

The AIA aims to provide AI developers, deployers and users with clear requirements and obligations, in order to ensure that European citizens can trust AI-powered applications. A risk-based methodology has been laid down to limit significant threats to the health and safety or the fundamental rights of persons. The approach categorises AI systems considering four risk tiers: (i) unacceptable risk, (ii) high risk, (iii) limited risk, and (iv) minimal or no risk (see Figure 1). According to the risk level they raise, the AI systems will have to comply with a set of horizontal mandatory requirements for trustworthy AI and to follow conformity assessment procedures before entering the Union market. The foremost focus of the AIA is on the high-risk AI systems.

Figure 1. An illustration of the risk-based methodology proposed by the EU Artificial Intelligence Act

AI systems that pose an unacceptable level of risk (e.g., social scoring or real-time remote biometric identification systems) are banned outright. The use of applications with minimal or no risk (e.g., AI-enabled video games or spam filters) is free of obligations. AI systems that pose limited risks are subject only to specific transparency obligations (e.g., users interacting with a chatbot should be clearly informed that they are doing so). High-risk AI systems, by contrast, will be subject to strict technical, monitoring, and compliance obligations before they can enter the market. The key obligations comprise:

- adequate risk assessment and mitigation systems

- high quality of the datasets feeding the system, to minimise risks and discriminatory outcomes

- logging of activity to ensure traceability of results

- detailed documentation providing all information necessary on the system and its purpose for authorities to assess its compliance

- clear and adequate information to the user

- appropriate human oversight measures to minimise risk

- high level of robustness, security, and accuracy.

High-risk AI systems comprise products that are already subject to other EU safety regulations (e.g., medical devices[8]) and those specifically listed in the AIA, such as systems for the management and operation of critical infrastructure, for education and vocational training, and for post remote biometric identification.

The Commission has clearly declared its intent to establish a balanced, cross-sector regulation that avoids duplicating frameworks and placing undue limitations or market hindrances on technological advances. Nevertheless, a consultation has been launched to receive feedback on the AIA. The preliminary responses from the med-tech scientific, clinical and industrial communities stress the need for coordinated and definite regulation that takes into account the specificities of the healthcare field[9], and raise concerns about potential misalignment or duplication of regulations[10].

Although it predates the AIA, ProCAncer-I was conceived taking due account of the EU guidelines and recommendations on AI available at that time, as well as the provisions of other regulations[8] set by health authorities on medical devices and software as a medical device. In this respect, significant effort has been planned to establish the ProCAncer-I regulatory framework, which provides guidance for the delivery, validation, and qualification of AI models that are ethically aware, legal and GDPR-compliant, as well as trustworthy, safe, robust, and traceable. Work Package (WP) 2 is entirely devoted to the ethical and legal aspects, establishing the legal framework for accessing and processing clinical data. WP4 ensures the provenance transparency and quality curation of the imaging and non-imaging datasets. WP4 also provides the solutions for assessing and monitoring risks and for continuously monitoring the performance of algorithms. This monitoring is linked to an audit trail layer, which will guarantee the traceability of all the processes involved in the execution of the AI models. Finally, WP6 encompasses concerted efforts to ensure the trustworthiness of the AI-powered solutions, by working on: (i) fairness and privacy preservation, (ii) safety and robustness, (iii) explainability and interpretability, and (iv) reproducibility and verifiability. All activities benefit from close cooperation between developers (e.g. computer scientists and engineers) and domain experts/end-users (e.g. clinicians). This cooperation enables a clear definition of the performance metrics used to assess the value and efficiency of the AI framework in terms of trustworthiness.

Further to the work planned in the Description of the Action, ProCAncer-I is cooperating with the other projects funded under the H2020 call SC1-FA-DTS-2019-1 to define common strategies to develop, regulate, and validate AI models in medical imaging. The first result of this collaboration has been the drafting of six principles that should guide future AI developments in medical imaging[11]. The final goal is to ensure increased trust, safety and adoption.

[1] Pesapane, F., Codari, M. & Sardanelli, F. Artificial intelligence in medical imaging: threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur Radiol Exp 2, 35 (2018). https://doi.org/10.1186/s41747-018-0061-6

[2] European Commission, Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation)

[3] EIT Health and McKinsey & Company joint report “Transforming healthcare with AI. The impact on the workforce and organisations”, 2020 – https://tinyurl.com/rvwfw3cw

[4] EC’s Communication “Artificial Intelligence for Europe”, COM(2018)237 – https://tinyurl.com/39mvfy6d

[5] HLEG on AI, “Ethics Guidelines for Trustworthy AI” – https://tinyurl.com/4tej3t38

[6] EC “Proposal for a Regulation of the European Parliament and of the Council Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act)” COM/2021/206 final – https://tinyurl.com/4aa9d6e7

[7] European Commission, “Proposal for a Regulation of the European Parliament and of the Council on European data governance (Data Governance Act)”, COM/2020/767 final – https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A52020PC0767

[8] EC, “Regulation (EU) 2017/745 of the European Parliament and of the Council of 5 April 2017 on medical devices”, 2017 – https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A32017R0745

[9] ESR’s feedback to AI Act – https://tinyurl.com/envzbud2

[10] MedTech Europe response to the open public consultation on the Proposal for an Artificial Intelligence Act (COM/2021/206) – https://tinyurl.com/3ck4tpx9

[11] Lekadir, K. et al. “FUTURE-AI: Guiding Principles and Consensus Recommendations for Trustworthy Artificial Intelligence in Medical Imaging”, arXiv, 2021 – https://arxiv.org/pdf/2109.09658.pdf