By Prof. Daniele Regge [Chief of the Radiology Unit, Candiolo Cancer Institute, Turin, Italy] and Simone Mazzetti [Candiolo Cancer Institute, Turin, Italy]

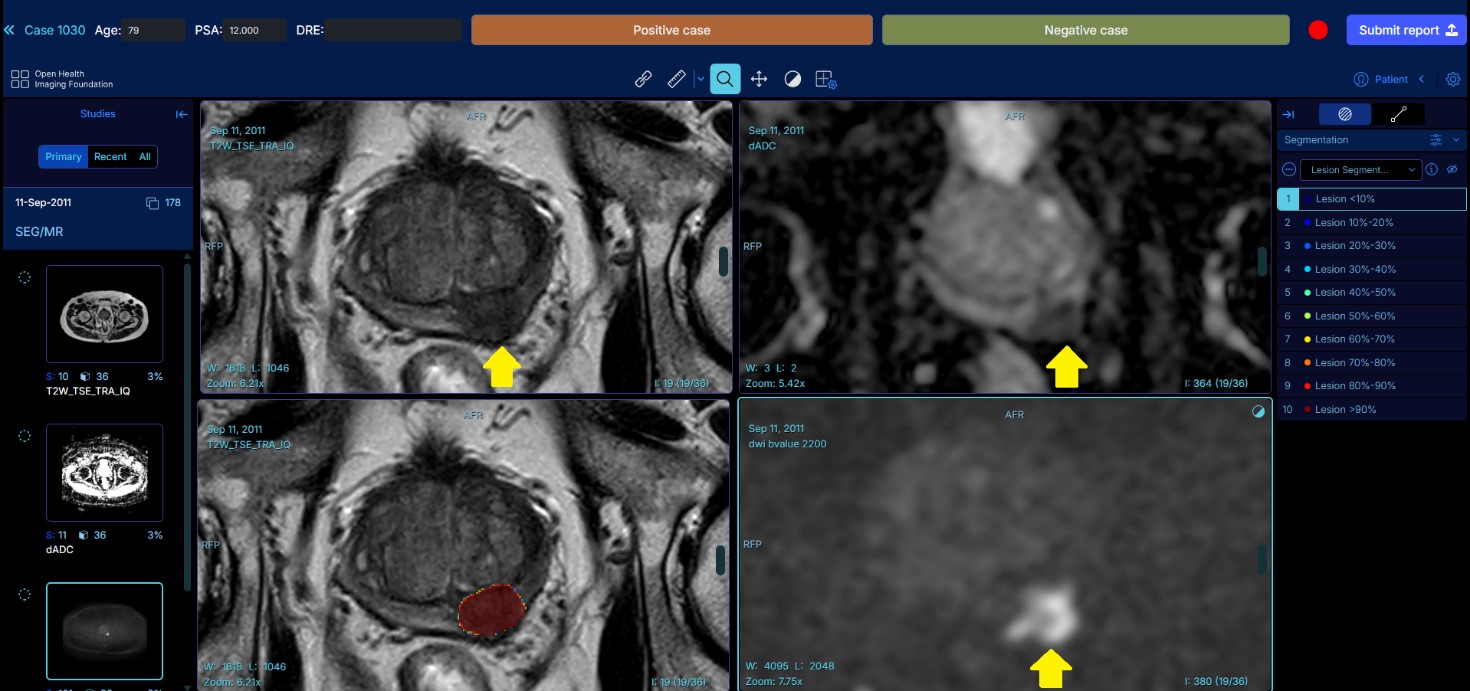

The clinical validation study was designed to assess the diagnostic performance of artificial intelligence (AI) models used by radiologists in a simulated clinical environment to identify prostate cancer lesions on magnetic resonance imaging (MRI). Two reading sessions were conducted. In the first, unaided session, readers assessed the MRI examinations without AI assistance. In the second, AI-assisted session, readers could use the output of AI models that highlighted the location and extent of potential prostate lesions (see Figure).

Example of the reading platform used for the clinical validation. The prostate lesion is highlighted by the AI software in red in the lower-left window. The yellow arrow points to the prostate lesion in the remaining three series.

The objective was to evaluate how readers interact with AI tools and how that interaction affects diagnostic accuracy, lesion detection sensitivity, and reporting times. Within the ProCancer-I project, two AI models for prostate cancer detection on MRI were developed and underwent clinical validation: one from the Candiolo Cancer Institute (Turin, Italy) and the other from the Champalimaud Foundation (Lisbon, Portugal).

A total of 320 anonymized MRI examinations, with a cancer prevalence of 50% and balanced for MRI vendor and lesion size, were selected from the ProstateNET dataset. These exams were assigned to 20 radiologists from 10 clinical partners within the ProCancer-I Consortium, representing a wide range of experience levels in prostate imaging reporting.

The validation study began in December 2024, with radiologists independently reviewing the MRI scans without AI assistance. Each reader assessed 64 cases, identifying and annotating suspicious lesions, assigning PI-RADS scores, and reporting their level of diagnostic confidence. After a 6-week interval, intended to minimize recall of prior assessments, the AI-assisted session was conducted. Between February and March 2025, the same radiologists re-evaluated the 64 MRI scans, this time supported by AI: 32 cases with the Candiolo model, and 32 with the Champalimaud Foundation model.

Performance metrics included overall sensitivity, specificity and reporting times, with and without AI assistance. Additional analyses stratified the results by lesion aggressiveness, size, location and MRI vendor.
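As a reminder of how the two accuracy metrics named above are defined, here is a minimal Python sketch. The function names and the example counts are illustrative assumptions, not study data, and the study may have computed these metrics per lesion rather than per case.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Share of cancer-positive cases that the reader correctly flagged."""
    return true_positives / (true_positives + false_negatives)


def specificity(true_negatives: int, false_positives: int) -> float:
    """Share of cancer-negative cases that the reader correctly cleared."""
    return true_negatives / (true_negatives + false_positives)


# Hypothetical counts for one reader's 64 cases, assuming a 32/32 split
# between positive and negative exams. These are NOT results from the study.
tp, fn = 24, 8
tn, fp = 28, 4

print(f"sensitivity = {sensitivity(tp, fn):.1%}")
print(f"specificity = {specificity(tn, fp):.1%}")
```

Computing both metrics once for the unaided session and once for the AI-assisted session, for each reader, gives the paired values that a comparison like the one reported below relies on.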

The results demonstrated a statistically significant improvement in lesion detection sensitivity among readers whose baseline (unaided) sensitivity was below 80%, along with a reduction in reporting times of approximately 16% (120 seconds unaided vs 100 seconds AI-assisted).

| Reader group | Mean unassisted sensitivity | Mean assisted sensitivity | Δ | p |
|---|---|---|---|---|
| Readers with unassisted sensitivity <50% | 43% | 58% | +15% | 0.018 |
| Readers with unassisted sensitivity between 50-80% | 62% | 69% | +7% | 0.035 |
| Readers with unassisted sensitivity ≤80% | 57% | 66% | +9% | 0.003 |
| Readers with unassisted sensitivity >80% | 88% | 77% | -11% | 0.059 |
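The reported figures can be cross-checked with simple arithmetic. The sketch below, in Python, recomputes the Δ column from the table values and the relative reduction in reporting time from the two rounded times quoted in the text. The variable names are illustrative. Note that the rounded times give about 16.7%, close to the roughly 16% quoted; the small gap presumably reflects rounding of the reported means, which is an assumption on our part.

```python
# Mean sensitivities copied from the table above, in percent:
# reader group -> (unassisted, assisted).
groups = {
    "<50%": (43, 58),
    "50-80%": (62, 69),
    "<=80%": (57, 66),
    ">80%": (88, 77),
}

# Delta is the assisted minus the unassisted value, in percentage points.
deltas = {
    name: assisted - unassisted
    for name, (unassisted, assisted) in groups.items()
}
for name, delta in deltas.items():
    print(f"{name}: {delta:+d} percentage points")

# Reporting times quoted in the text, in seconds (rounded values).
unaided_s, aided_s = 120, 100
relative_reduction = (unaided_s - aided_s) / unaided_s
print(f"relative reduction in reporting time: {relative_reduction:.1%}")
```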

The clinical validation study concludes that AI-assisted reading can enhance radiologist performance in prostate MRI interpretation, especially for readers with a lower baseline sensitivity. These findings support the integration of AI tools into routine clinical workflows to improve readers' diagnostic accuracy, particularly for less experienced readers.